Recently I deployed a new SDDC based on the latest vSphere 6.7 release. VMware is really pushing the HTML5 client, and that is absolutely fine by me, but it’s still not 100% complete. I’m curious whether the U1 release targeted for Fall 2018 will improve the customer experience. Anyway, the point is that the VSAN menu options are completely missing in the HTML5 client, so the fallback is the FLEX-based client, which does work. Since I didn’t want to waste my time debugging everything, I raised a Support Request.

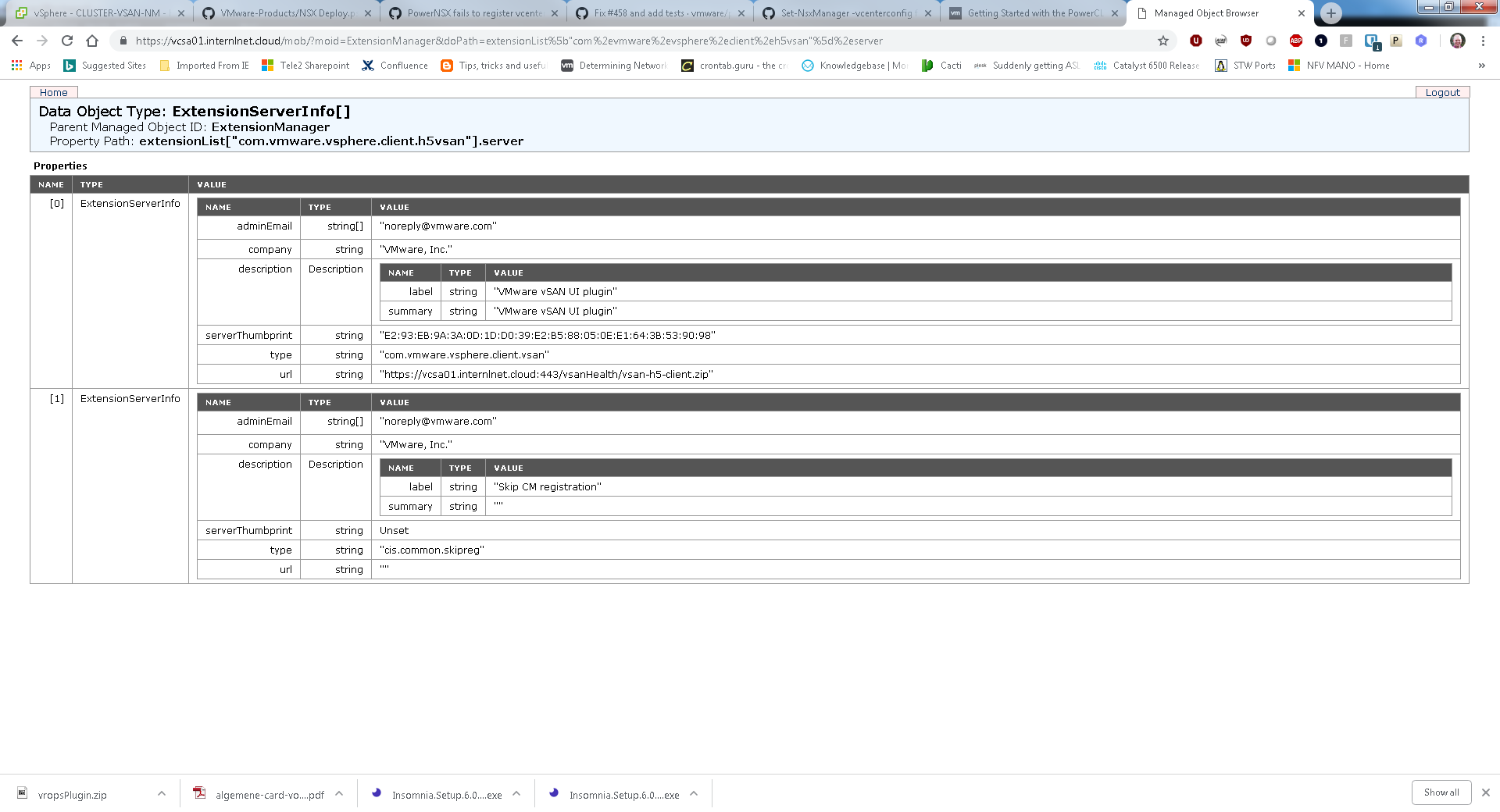

After several weeks they found the cause: a thumbprint mismatch 🙁 Let’s explain a bit more about the configuration I’m running. It’s a vCenter with load-balanced Platform Services Controllers and custom (proper) certificates. The thumbprint doesn’t get updated when you install custom certificates. But there is a workaround (thanks Peter) to get it working.

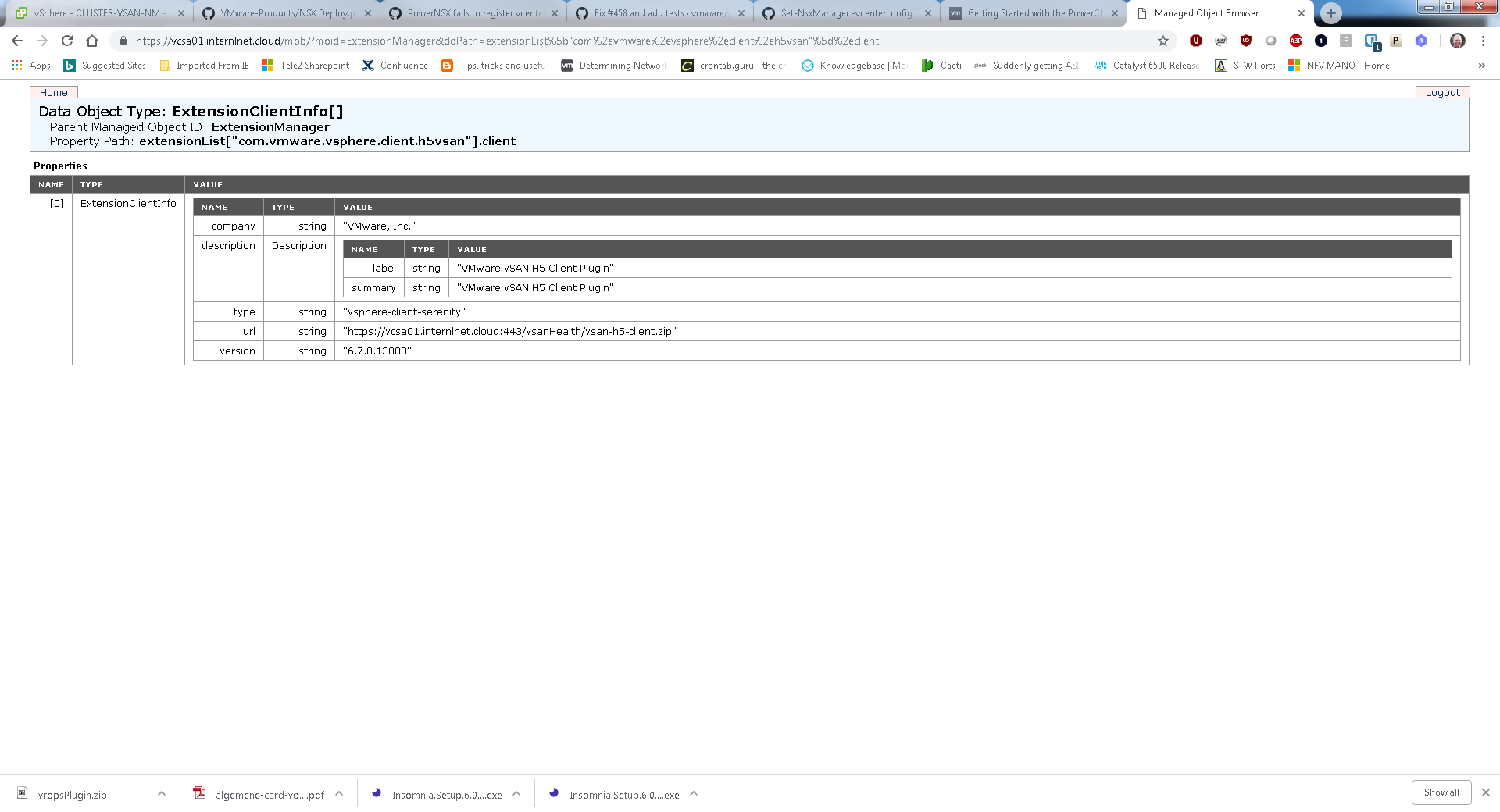
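To make the idea of a thumbprint mismatch a bit more concrete, here is a minimal sketch that compares the SHA-1 fingerprint of the certificate an endpoint actually serves with a value you expect. The function names are my own, and where the stale thumbprint is stored is not covered here, so the expected value is a placeholder you have to supply yourself.

```shell
# Hypothetical helpers, not part of any VMware tooling.

# Strip the "SHA1 Fingerprint=" prefix, colons and whitespace, then upper-case,
# so thumbprints written in different styles can be compared.
normalize_thumbprint() {
  printf '%s' "${1##*=}" | tr -d ': \n' | tr '[:lower:]' '[:upper:]'
}

# Print the SHA-1 fingerprint of the certificate served on host:port.
served_thumbprint() {
  local host="$1" port="${2:-443}"
  openssl s_client -connect "${host}:${port}" -servername "${host}" </dev/null 2>/dev/null \
    | openssl x509 -noout -fingerprint -sha1
}

# Return 0 when both thumbprints are equal after normalization.
thumbprints_match() {
  [ "$(normalize_thumbprint "$1")" = "$(normalize_thumbprint "$2")" ]
}

# Example (EXPECTED is a placeholder for the thumbprint you believe is registered):
# thumbprints_match "$(served_thumbprint vcsa01.demo.cloud)" "$EXPECTED" || echo "mismatch"
```

If the two values differ after installing custom certificates, you are probably looking at the same kind of problem described here.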

Let’s first analyse the logs to see why the H5VSAN plugin is not loading.

    [2018-10-02T08:41:29.955Z] [INFO ] vc-extensionmanager-pool-242 70000299 100012 200003 com.vmware.vise.vim.extension.VcExtensionManager Downloading plugin package from https://vcsa01.demo.cloud:443/vsanHealth/vsan-h5-client.zip (no proxy defined)
    [2018-10-02T08:41:29.979Z] [WARN ] vc-extensionmanager-pool-242 70000299 100012 200003 com.vmware.vise.vim.extension.VcExtensionManager Package com.vmware.vsphere.client.h5vsan was not installed. Error downloading https://vcsa01.demo.cloud:443/vsanHealth/vsan-h5-client.zip. Make sure that the URL is reachable then logout/login to force another download.

A plain local wget on the appliance downloads the file just fine, as does the browser, so the error message is not very useful.

The only way to force the extensions to be activated on the appliance is to do it manually.

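Create a directory named extensionsList[“XXX”]-[version], i.e. the extension key followed by its version (here com.vmware.vsphere.client.h5vsan-6.7.0.13000), and unzip the contents of the zip file into it: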

    root@vcsa01 [ ~ ]# tail -f /storage/log/vmware/vsphere-client/logs/vsphere_client_virgo.log
    root@vcsa01 [ ~ ]# mkdir -p /etc/vmware/vsphere-ui/vc-packages/vsphere-client-serenity/com.vmware.vsphere.client.h5vsan-6.7.0.13000
    root@vcsa01 [ ~ ]# wget https://vcsa01.demo.cloud/vsanHealth/vsan-h5-client.zip
    root@vcsa01 [ ~ ]# unzip vsan-h5-client.zip -d /etc/vmware/vsphere-ui/vc-packages/vsphere-client-serenity/com.vmware.vsphere.client.h5vsan-6.7.0.13000
    root@vcsa01 [ ~ ]# rm vsan-h5-client.zip
    root@vcsa01 [ ~ ]# service-control --stop vsphere-ui
    root@vcsa01 [ ~ ]# service-control --start vsphere-ui
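If you need to repeat this for more than one extension, the manual steps above could be wrapped in a small helper. This is only a sketch under the assumption that the directory layout is the same as in my environment; the function names `plugin_dir` and `install_plugin` and the `PLUGIN_ROOT` variable are made up for this example.

```shell
# Base directory taken from the commands above; override PLUGIN_ROOT if yours differs.
PLUGIN_ROOT="${PLUGIN_ROOT:-/etc/vmware/vsphere-ui/vc-packages/vsphere-client-serenity}"

# Build the target directory: <root>/<extension key>-<version>
plugin_dir() {
  printf '%s/%s-%s\n' "$PLUGIN_ROOT" "$1" "$2"
}

# Download the plugin zip and unpack it into the matching directory.
install_plugin() {
  local key="$1" version="$2" url="$3"
  local dest tmp rc
  dest="$(plugin_dir "$key" "$version")"
  tmp="$(mktemp)" || return 1
  # Remove the temporary file again if the download fails.
  wget -q -O "$tmp" "$url" || { rm -f "$tmp"; return 1; }
  mkdir -p "$dest" && unzip -q -o "$tmp" -d "$dest"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Example, using the values from this post:
# install_plugin com.vmware.vsphere.client.h5vsan 6.7.0.13000 \
#   https://vcsa01.demo.cloud/vsanHealth/vsan-h5-client.zip
# service-control --stop vsphere-ui && service-control --start vsphere-ui
```

The block only defines functions, so nothing is touched until you call `install_plugin` yourself; the restart of vsphere-ui is left as a separate, deliberate step.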

After this, the HTML5 client for VSAN loads correctly! The same principle applies to other extensions.